I don’t know what you think about containers and orchestration tools, but I’ve been discussing these topics on forums and various platforms for a while now, and I can’t say it’s an easy discussion.

To be honest, I’m quite tired of repeating the same concepts, so I thought this blog could help me express my point of view. At least in the future I’ll only have to copy and paste this post’s URL and not waste any more time :)

To some people my point of view on containers and microservices may sound a bit grumpy, but I can assure you that I’m not against them, nor do I think they are bad or just some nasty trend.

First of all, it’s imperative to distinguish between two main scenarios: development/test and production.

For the first one containers are wonderful, they are Nirvana and Shangri-La all together. They give developers the opportunity to set up a dev environment in no time: no setup, no hard requirements, everything is perfectly consistent and works the same way as the tools they are familiar with (think about git or other version control software). Everything is perfectly reproducible on whatever platform: their work PC, home PC, a server, a laptop, everything.

For production… well, it’s not the same.

First of all: the most important requirement for a production environment is reliability, then comes security, then everything else.

Literature, research, experience and logic have taught us that these two basic requirements can be met only by following the KISS principle (Keep It Simple, Stupid), which is not a joke. It’s real, it’s true and it works, period.

Second, when your application moves from development to production it moves from developers to sysadmins. It’s the sysadmin who must maintain it, provide resources, and guarantee that the application (and all its requirements) is working, reliable and kept secure.

Third: if you think about the lifecycle of an application, most of it is spent in production. An application may require a few months of development but will remain online for years, and usually the longer the development takes, the longer it will remain in production.

Following the KISS principle means REDUCING COMPLEXITY, while containers ADD COMPLEXITY :\

This may sound strange to container users because “well, it’s a piece of cake, launch a couple of commands and you’re ready to go”. Well, stop for a moment and think about it.

- On a “legacy” environment you have applications (your site, your database, etc.) on top of services (web servers, application servers, RDBMSs, etc., shared by several applications), on top of an OS (shared by several services), on top of some sort of hardware (which can be very complex in production, and can be shared between several OSs if you’re using virtual machines).

- With containers and orchestrators (like Kubernetes or OpenShift) you have a LOT more complexity: you still have your applications, on top of services (which are the same as before…), on top of containers, on top of a container environment (for example Docker), on top of orchestrators, on top of an OS (usually installed on a VM, so add the hypervisor complexity too), on top of hardware.

More complexity means less reliability and less security, or at least a lot more work and variables to manage in order to reach the same level on those requirements.
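To make the comparison above concrete, here is a minimal sketch that writes the two stacks down as plain lists and shows which layers the container setup adds. The layer names are just labels taken from the two bullets; placing the hypervisor only in the container stack is a simplifying assumption (the legacy stack may run on VMs too).

```python
# Layers from top to bottom, as listed in the two bullets above.
legacy_stack = ["applications", "services", "OS", "hardware"]

# Assumption: the hypervisor is counted only here, because the post says
# the OS is "usually installed on a VM" in this scenario.
container_stack = [
    "applications",
    "services",
    "containers",
    "container environment",
    "orchestrator",
    "OS",
    "hypervisor",
    "hardware",
]

# Layers that exist only in the container-based stack.
added_layers = [layer for layer in container_stack if layer not in legacy_stack]

print(f"legacy layers:    {len(legacy_stack)}")
print(f"container layers: {len(container_stack)}")
print(f"added layers:     {added_layers}")
```

Every entry in `added_layers` is one more thing a sysadmin has to patch, monitor and secure.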

Like everything, there are pros and cons, and someone could argue that besides the added complexity, containers and orchestrators give you a lot of benefits, mainly:

- reproducibility

- horizontal scalability (adding more “nodes” and distributing load across them).

We already talked about the first one: it’s awesome for a developer, but for a sysadmin in a production environment?

Well, not really. We simply don’t need it, because moving an application between different environments is really rare (in 20+ years of IT consulting work it happened to me only once, moving an Oracle 10g RDBMS from Windows Server 2003 to RHEL), and usually every service has its own backup and restore procedures to accomplish this goal.

Scalability is another story: in theory it’s an awesome thing, in the real world it’s not a big deal for 99% of companies or services.

The idea of having some black magic that adds more and more instances of your application and distributes the load across them is good, and managers love it. They simply think this is the solution to every problem because, let’s be honest, they don’t understand the complexity behind an application and they think that all problems come from a lack of resources.

In the real world, any experienced sysadmin can confirm that resources are usually enough, and when an application has problems it’s all about some bug, exception or unhandled situation (for example, the application uses a third-party service which doesn’t work, and the application does not handle it). All those things can lead to a slow or unresponsive application even if you have a lot of resources available.

Adding more and more application instances and balancing load across them will lead to a simple result: more and more exceptions.
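A back-of-the-envelope sketch of that point, with purely hypothetical numbers: assume a bug makes 20 requests out of every 1000 fail, and each instance serves 1000 requests. The function name and its parameters are made up for illustration. Scaling out does not touch the failure ratio, it only multiplies the number of failures.

```python
def failed_requests(instances, requests_per_instance, failures_per_thousand):
    """Count failed requests when the bug rate is independent of capacity.

    All three inputs are hypothetical; integer math keeps the result exact.
    """
    total_requests = instances * requests_per_instance
    return total_requests * failures_per_thousand // 1000


# Same bug, more instances: the failure count grows with the instance count.
for instances in (1, 2, 4, 8):
    failures = failed_requests(instances, 1000, 20)
    print(f"{instances} instance(s): {failures} failed requests")
```

The fix in this model is lowering `failures_per_thousand`, which means fixing the application, not adding instances.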

OK, let’s ride our fantasy horse and think about a bug-free application: can we scale up with containers with no worries?

Sure you can, but do you really need it?

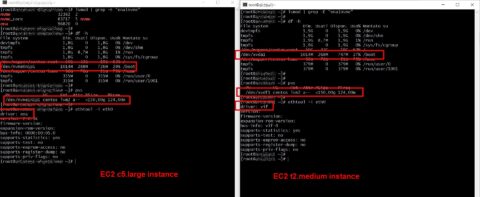

For 99% of companies nowadays it’s really rare that an application doesn’t have enough resources, or receives such a huge amount of requests that it runs out of resources.

If you are Google, Facebook, Netflix, Amazon or any other huge global company, maybe you really need horizontal scalability, so orchestrators and containers are very useful (it’s not surprising that one of the most popular orchestrators, Kubernetes, came from Google). Otherwise… well no, you don’t need it.

So that’s all?

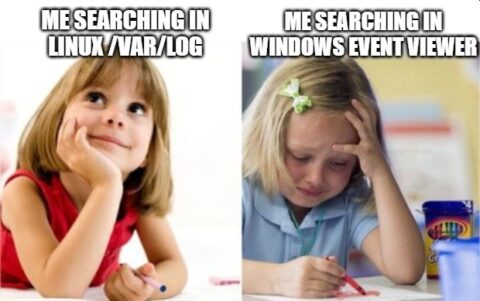

No, there are a lot of smaller pros and cons (mostly cons to be honest, imho…) on this topic, like security, access to third-party resources, logging, service redundancy vs consolidation and many more.

Most of these are huge problems with containers (and most of the time they are ignored) while with a traditional architecture they simply aren’t. Even simply appending stdout to a log is a pain in the ass with containers, and requires adding a lot of complexity to reach this simple goal (remember the KISS principle).

Let me add a small personal thought on this subject that can be extended to many other areas of the IT universe.

As you have probably understood, I think that containers are a really good tool for developers and not a good one for sysadmins. Containers were born from developers, for developers.

Why do people always think that a development tool should work for production?

Why don’t people think from a production perspective when they develop tools for production?

If you are a developer and you’re working on a tool that will be used in production, please ask your sysadmin what he thinks about it, what his requirements are, and what problems he usually has in production and needs to fix.

Maybe if we start to develop tools from the right perspective we’ll have better results; otherwise we will keep having the same problems.

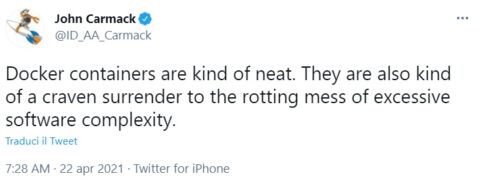

[EDIT 23/04/2021]

Let me just add a little contribution from John Carmack, one of the greatest developers of all time, the father of DooM and Quake, and of modern FPS videogames in general.

As you may have heard, on March 10th
