16/03/2024

RPi4 power consumption

It’s been a while since I started using a Raspberry Pi 4 as a home server instead of my old Banana Pi. Yesterday I was following an interesting thread on a forum comparing the RPi5 to some x64 boards, especially those using the Intel N100 processor.

This thread made me remember the good old days when I started using my ancient Via Epia board and I won a forum competition for the home server with the lowest possible power consumption.

Now it seems like people complain about the RPi because of its cost and its performance/power ratio compared to other boards, like those using the Intel N100, and this pushed me to check out my beloved Pi’s power consumption. Let’s bust some myths!

Before starting let me roughly explain how I use my RPi4, just to clarify that it’s not an idle server stuck in a closet to absorb electricity:

- backup server (I keep the main backup copy on the Pi; every night I start a second server via PoE and sync every backup to it and to a Backblaze B2 bucket)

- hosting server (a few sites, mainly based on PHP cms and published via Cloudflare Tunnel)

- personal wiki with Bookstack

- PiHole server

- phpIPAM server

- Nagios server to monitor my network and my devices

- Collectd + Collectd Graph Panel to monitor resource usage

- Ubiquiti UniFi Controller to manage my Wi-Fi APs

- Document management system with Paperless NGX

- Wireguard server for VPN

- Gitea as my private git repository

- Webmail server with Roundcube to get any notification from my cron jobs and devices on LAN

- Immich as a backup server for media on mobile devices

- Jellyfin server

- Vaultwarden as password manager

- several LAMP stacks here and there to try things, web projects etc etc…

Now let’s take a look at my setup:

- Raspberry Pi 4B with 8GB of RAM

- Crucial BX500 240GB SATA SSD as a boot device via USB on the RPi4

- Seagate ST1000LM024 1TB SATA 2.5″ hard drive as a data volume connected via USB to the RPi4

To measure power consumption I used a Shelly Plug, plus an external self-powered USB hub to measure each USB drive on its own.

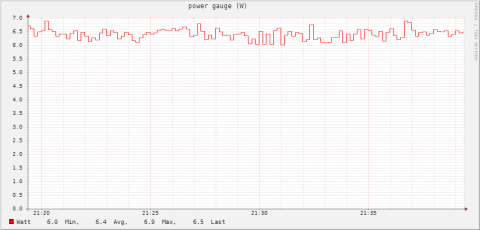

This is my RPi4 power consumption at idle with the two USB drives; as you can see we’re at a 6.4W average, not bad…

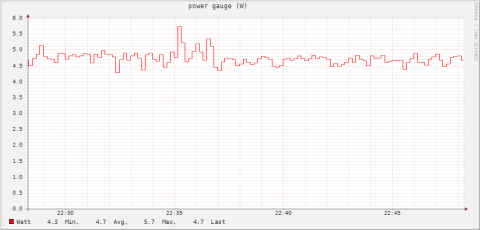

Here is the Pi with only the SSD, around 4.7W

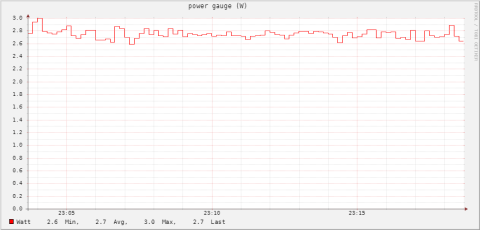

And here’s the mechanical HDD by itself at around 2.7W

As you can see, the sum of the Pi+SSD (4.7W) and the HDD (2.7W) is higher than the average power consumption of the three devices measured together (7.4W vs 6.4W), and that’s pretty normal: attaching the HDD directly to the Pi and powering it via the Pi’s USB ports is more efficient and avoids the overhead of the external USB hub (which is probably less efficient than the Pi and its PSU).
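To make the arithmetic explicit, here is a small sketch using the readings above. The function names are mine, and the yearly figure assumes the draw stays constant at the idle average, which is only an approximation.

```shell
#!/bin/sh
# Readings from the measurements above, in watts.
PI_SSD=4.7   # Pi + SSD
HDD=2.7      # HDD alone, powered by the external hub
ALL=6.4      # Pi + SSD + HDD, everything powered by the Pi

# Difference between the two separate readings and the combined one.
hub_overhead() {
    awk -v a="$PI_SSD" -v b="$HDD" -v c="$ALL" 'BEGIN { printf "%.1f\n", a + b - c }'
}

# Energy used in one year at a constant draw of $1 watts, in kWh.
# Assumption: the draw never moves from the given value.
yearly_kwh() {
    awk -v w="$1" 'BEGIN { printf "%.1f\n", w * 24 * 365 / 1000 }'
}

echo "overhead: $(hub_overhead) W"
echo "per year: $(yearly_kwh "$ALL") kWh"
```

With these numbers the hub accounts for about 1.0 W, and the whole setup uses roughly 56.1 kWh per year.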

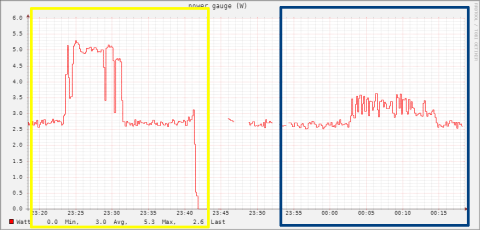

Let’s continue with the drives: in this test I measured the power consumption of the SSD and the HDD powered via the external USB hub.

This test imho is particularly interesting because it shows something I didn’t expect. The yellow box shows the HDD test while the blue box shows the SSD test.

As you can see the idle power consumption is not that different between the two drives (~2.7W), and that shocked me because I thought the SSD would be much more energy efficient and less power hungry. If we move to the stress test we can see a huge difference between the two, and that’s what I was expecting to see.

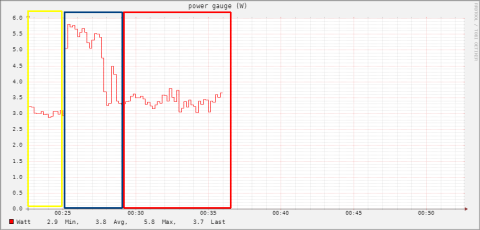

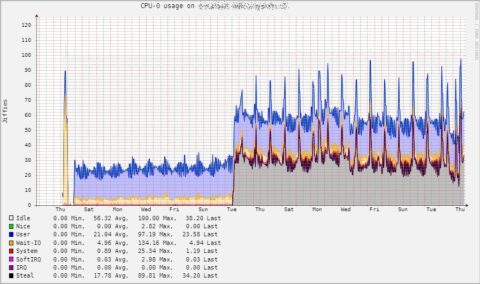

And finally we can see how much the application load really impacts power consumption.

For this test I measured only the Pi’s power consumption, without SSD or HDD. In the first section (marked by the yellow box) you can see the Pi’s consumption at idle with only the basic OS (Debian 11) running on it; then I started all the applications I usually run on it, all together (check the spike in the blue box); and finally there is the Pi with all the services running on it (red box).

As you can see there’s not much difference between an idle system with basically no services running on it and the system running all the services I mentioned at the beginning.

Obviously we’re talking about a home server solution, with basically one user and a few hosted sites (small WordPress sites with a few Matomo instances and not a lot of visitors).

I hope this will be helpful if you’re thinking about buying a Raspberry Pi as your own home server.

03/04/2020

AWS EC2 instance migration

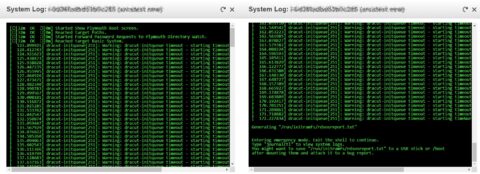

Recently I received some complaints about load problems on an AWS EC2 t2.medium instance with CentOS 7; despite being a development environment it was under heavy load.

I checked logs and monitoring and excluded any kind of attack. After a talk with the dev team it was clear that the load was ok for the applications running (some kind of elasticsearch scheduled bullshit).

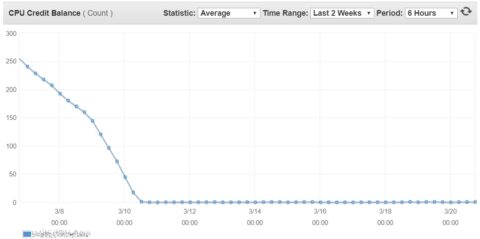

The load was 100% from cpu, but I noticed some interesting behavior over the last couple of weeks, with a lot of steal time.

Looking at the EC2 CPU Credits it was crystal clear that we had run out of cpu credits, which turned on some heavy throttling.
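If you prefer the command line to the console graphs, the same check can be sketched with the AWS CLI, assuming it is installed and configured. The function name and the instance id in the usage line are placeholders of mine; CPUCreditBalance is the CloudWatch metric for burstable instances.

```shell
#!/bin/sh
# Print the average CPUCreditBalance of one instance in 5-minute steps.
# $1 = instance id, $2 = start time, $3 = end time (ISO 8601, UTC)
credit_balance() {
    aws cloudwatch get-metric-statistics \
        --namespace AWS/EC2 \
        --metric-name CPUCreditBalance \
        --dimensions "Name=InstanceId,Value=$1" \
        --start-time "$2" --end-time "$3" \
        --period 300 --statistics Average \
        --query 'sort_by(Datapoints,&Timestamp)[].[Timestamp,Average]' \
        --output text
}

# Usage (hypothetical instance id):
#   credit_balance i-0123456789abcdef0 2020-04-01T00:00:00Z 2020-04-02T00:00:00Z
```

A balance that sits at or near zero for long stretches is the sign that the instance is being throttled.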

Since the developers can’t reduce the load from the applications and the management won’t move from EC2, the solution I suggested was to move to a different kind of instance specifically designed for heavy computational workloads and without cpu credits.

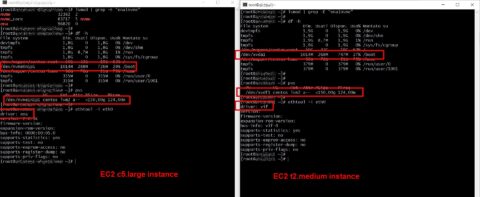

So I made some snapshots and launched a new C5 instance, piece of cake, right?

Well no… as soon as I started the new instance it wouldn’t boot, and returned “/dev/centos/root does not exist” in the logs. :\

So what’s going on here?

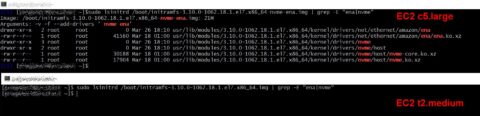

Pretty simple: there are significant hardware differences between EC2 instance types. For example, C5 instances expose their storage as NVMe devices, which requires a specific kernel module (nvme), and the same goes for the network interface, which needs the ena module.

The goal here is to make a new init image with these two modules inside, so that during boot the kernel can use these devices and find a usable volume to boot from and a NIC for the network. The only problem is that we can’t simply boot the system from a live distro and build a new init image with those modules already loaded; remember we’re on AWS, not on a good old VMware instance (sigh…).

First of all I terminated the new instance, it was basically useless, and got back to the starting T2 instance.

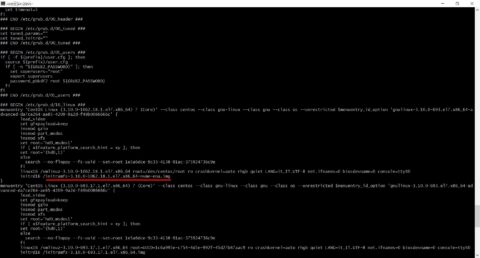

Check which kernel version you’re using with “uname -a” and build a new init image including nvme and ena modules using mkinitrd, for example:

mkinitrd -v --with=nvme --with=ena -f /boot/initramfs-3.10.0-1062.18.1.el7.x86_64-nvme-ena.img 3.10.0-1062.18.1.el7.x86_64

Using lsinitrd you can check that your new init image has nvme and ena module files inside.
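The two steps above can be wrapped in a small sketch that takes the kernel version from “uname -r” instead of typing it by hand. The function names are mine, and it assumes you run it as root on the old T2 instance.

```shell
#!/bin/sh
# Path of the new image for a given kernel version.
initrd_path() {
    printf '/boot/initramfs-%s-nvme-ena.img\n' "$1"
}

# Build the image for the running kernel, then list the driver files in it.
build_initrd() {
    kver=$(uname -r)
    img=$(initrd_path "$kver")
    mkinitrd -v --with=nvme --with=ena -f "$img" "$kver" || return 1
    # Expect to see both an nvme and an ena module file in this output.
    lsinitrd "$img" | grep -E '/(nvme|ena)\.ko'
}

# Usage: build_initrd
```

If grep prints nothing, the modules did not make it into the image and there is no point in going on with the grub change.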

Now you have to edit your grub config file (/boot/grub2/grub.cfg) and change your first menu entry switching from the old init image to the new one.

Save /boot/grub2/grub.cfg file, CHECK AGAIN YOU HAVE A GOOD SNAPSHOT OR AN AMI, and reboot, nothing should have changed.

Now you can make a new snapshot or AMI and build a new instance from it, choose a C type instance and now it should be able to boot properly.

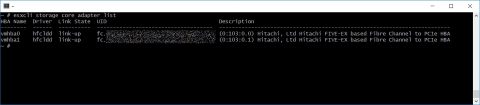

As you can see the new C5 instance has different storage device names, it has a new nic driver (ena) and it has the ena and nvme modules loaded.
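For reference, these are the kind of commands that give you that information on the new instance. This is only a sketch: the function name is mine, the interface name eth0 is an assumption, and ethtool may need to be installed first.

```shell
#!/bin/sh
# Quick look at storage names, loaded modules and nic driver.
# $1 = network interface (assumed to be eth0 if not given)
check_c5() {
    lsblk                            # disks should show up as nvme devices
    lsmod | grep -E '^(nvme|ena) '   # both modules should be listed
    ethtool -i "${1:-eth0}"          # the "driver:" line should say ena
}

# Usage: check_c5 eth0
```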

Life should be easier without the cloud… again.

13/08/2018

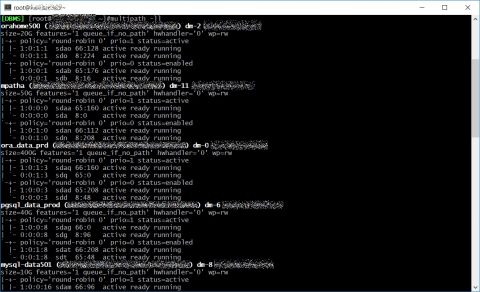

Quick check multipath status

GNU/Linux

On GNU/Linux checking multipath status is very easy: you only need to run “multipath -ll” and you’ll get the status of each path for every multipath device on your server. Regarding the HBA, all you need to know is under the /sys/class/fc_host directory, where you’ll find one host* directory for each device; inside those directories you’ll find port_name and node_name with the WWPN and WWN.

With basic bash skills and ssh you can easily grab that information on each server, this is a trivial example.
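A loop along these lines would do the job. It is a sketch, not the exact script from the screenshot: the function name and the host list file are mine, and it assumes key-based ssh access with a user allowed to run multipath.

```shell
#!/bin/sh
# Print multipath status and HBA WWPN/WWN for every host listed in a file.
# $1 = file with one hostname or IP per line
check_hosts() {
    while read -r host; do
        [ -n "$host" ] || continue   # skip empty lines
        echo "== $host =="
        # -n keeps ssh from swallowing the rest of the host list on stdin.
        ssh -n "$host" 'multipath -ll; grep -H . /sys/class/fc_host/host*/port_name /sys/class/fc_host/host*/node_name'
    done < "$1"
}

# Usage: check_hosts servers.txt
```

grep -H prints each file name next to its content, so you can tell which host* entry every WWPN and WWN belongs to.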


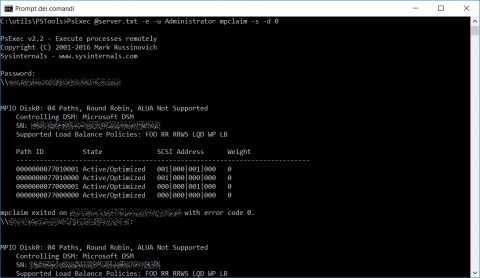

Windows

The only requirement is the fantastic and free PsExec utility from Mark Russinovich.

Edit a text file with a list of all your servers’ IPs or hostnames, one per line (server.txt), then run:

PsExec @server.txt -e -u <USERNAME> mpclaim -s -d <DEVICE ID>

If you want to see all the details (for example node number and port number) of your HBA, launch the Get-InitiatorPort command on a PowerShell instance with superuser grants.

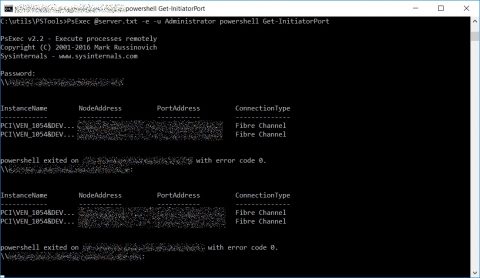

PsExec @server.txt -e -u <USERNAME> powershell Get-InitiatorPort


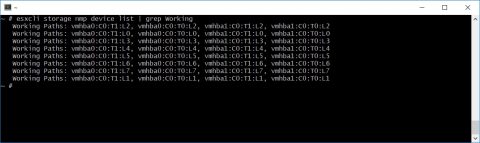

VMware ESXi

First of all you must enable the ssh daemon on each VMware host (follow this VMware KB article); if you want to log in with ssh keys follow this KB article.

To check multipath status run “esxcli storage nmp device list”. The output is quite verbose, so it’s better to grab only the information we need by adding a nice “| grep Working”; each line shows the working paths for a datastore on the VMware server.

You can find WWN and WWPN with “esxcli storage core adapter list”.


As with GNU/Linux servers, you can easily cycle through your VMware servers using ssh and bash to grab that information with a single script.
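Such a script could look like the sketch below. The function name and the host list file are mine, and logging in as root is an assumption; it only works once ssh is enabled on the hosts as described above.

```shell
#!/bin/sh
# Print working paths and HBA adapters for every ESXi host listed in a file.
# $1 = file with one hostname or IP per line
check_esxi() {
    while read -r host; do
        [ -n "$host" ] || continue   # skip empty lines
        echo "== $host =="
        # -n stops ssh from reading the host list from stdin.
        ssh -n "root@$host" 'esxcli storage nmp device list | grep Working; esxcli storage core adapter list'
    done < "$1"
}

# Usage: check_esxi esxi-hosts.txt
```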


20/09/2016

Dell Latitude E7470

Finally I changed my work laptop. 8 years ago I switched from an old IBM ThinkPad R50 (yes! It was a true IBM ThinkPad!) to a T500 ThinkPad from Lenovo.

It was a good pc, not very powerful but sturdy, with a full size keyboard and so many upgrade options like any other ThinkPad, a war machine!

Now the glorious T500 needs to retire: everything works, but I need an SSD, the screen resolution is ridiculous, and CPU and RAM are inadequate to run any virtual machine locally. So I started to look around for a new pc, and these were the requirements:

- CPU at least Core i5 (I don’t need huge computing power because I don’t have to render or compile source, I usually spend most of my working time in an ssh shell, and I don’t want a Boeing 747 fan at my side and a heavy PSU)

- RAM at least 8GB

- SSD storage (I think I don’t have to explain why…)

- Display resolution at least 1920×1080 (I don’t want to go crazy with external display for work)

- 14″ chassis (I hate those horrible 15.6″ chassis with the imho useless numeric keypad)

- Business line laptop

I started looking for a laptop with these requirements and I came to the Dell Latitude 5000 series: a nice line, solid, reliable and with great customer care (this is my experience with any Dell product, pc or server).

Sadly I had a bad experience with a Dell partner so I started looking around for an alternative… but last week one of our historic wholesale providers started to sell Dell products and I found the shiny Latitude E7470, which fits my requirements perfectly at an honest price… check, check, check!

So, here is my brand new laptop, my first experience with a Latitude product.

My first impressions:

- it’s thin and light (it’s branded as ultrabook although I don’t think it fits the Intel requirements for that) but it’s super sturdy!

- the display is AWESOME! It fully deserves all the good feedback you can find online.

- great I/O and options: it has 3 USB 3.0 ports (not bad for a thin laptop), two display outputs (mini DP and HDMI), a uSIM slot and also an integrated smartcard reader.

- nice storage performance (more than 500 MB/s in sequential read and more than 250 MB/s in sequential write), and I read it’s possible to install a second SSD in another slot.

The only complaint I have is about some keys (for example the HOME and END keys, which I use a lot) that need the FN key, and obviously the stupid Windows 10 scaling which blurs everything (but this is not a Dell problem).

And yes… I have to use Windows for now… :\

Here is the beast
