03/04/2020

AWS EC2 instance migration

Recently I received some complaints about load problems on an AWS EC2 t2.medium instance running CentOS 7. Despite being a development environment, it was under heavy load.

I checked logs and monitoring and ruled out any kind of attack. After a chat with the dev team it was clear that the load was normal for the applications running (some kind of scheduled elasticsearch bullshit).

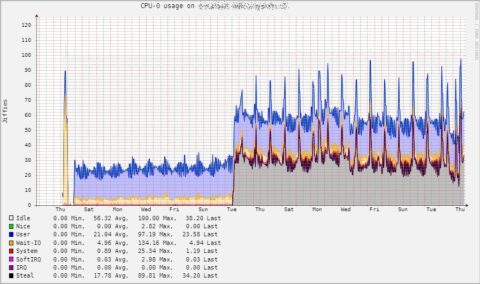

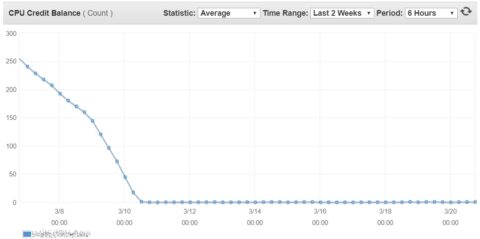

The load was 100% CPU, but I noticed some interesting behavior over the previous couple of weeks: a lot of steal time.

Looking at the EC2 CPU credits it was crystal clear that we had run out of them, which triggered some heavy throttling.

Since the developers couldn’t reduce the load from the applications and management wouldn’t move away from EC2, the solution I suggested was to move to a different instance type, one designed for heavy computational workloads and without CPU credits.
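If you want a number for the steal time instead of eyeballing a graph, a rough sketch like the one below can help. It assumes the standard Linux `/proc/stat` layout (user, nice, system, idle, iowait, irq, softirq, steal); the function name `steal_pct` is made up for this post.

```shell
#!/usr/bin/env bash
# steal_pct BEFORE AFTER
# Each argument is one aggregate "cpu ..." line taken from /proc/stat.
# Prints the percentage of CPU time that was stolen between the two samples.
steal_pct() {
  awk -v a="$1" -v b="$2" 'BEGIN {
    split(a, x, " "); split(b, y, " ")
    total = 0
    # Fields 2..9: user nice system idle iowait irq softirq steal
    for (i = 2; i <= 9; i++) total += y[i] - x[i]
    if (total <= 0) { print "0.0"; exit }
    printf "%.1f\n", 100 * (y[9] - x[9]) / total
  }'
}
```

To use it, take two samples a few seconds apart, for example `a=$(head -n1 /proc/stat); sleep 5; b=$(head -n1 /proc/stat); steal_pct "$a" "$b"`.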

So I made some snapshots and launched a new C5 instance, piece of cake, right?

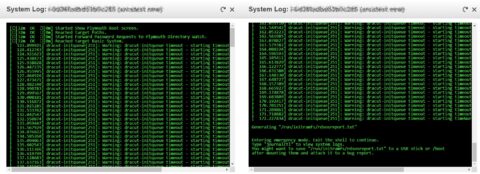

Well no… as soon as I started the new instance it wouldn’t boot, and “/dev/centos/root does not exist” showed up in the logs. :\

So what’s going on here?

Pretty simple: there are significant hardware differences between EC2 instance types. For example, C5 instances expose their EBS volumes as NVMe block devices, which require a specific kernel module (nvme), and the same goes for the network interface, which needs the ena module.

The goal here is to build a new init image with these two modules inside, so that during boot the kernel can use these devices and find a usable volume to boot from and a NIC for the network. The only problem is that we can’t simply boot the system from a live distro and build a new init image with those modules from there; remember we’re on AWS, not on a good old VMware instance (sigh…).

First of all I terminated the new instance, since it was basically useless, and went back to the original T2 instance.

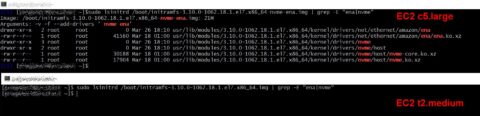

Check which kernel version you’re running with “uname -a” and build a new init image that includes the nvme and ena modules using mkinitrd, for example:

mkinitrd -v --with=nvme --with=ena -f /boot/initramfs-3.10.0-1062.18.1.el7.x86_64-nvme-ena.img 3.10.0-1062.18.1.el7.x86_64
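If you don't want to type the kernel version by hand, the same command can be wrapped in a small script. This is just a sketch: the function names `initramfs_path` and `build_initramfs` are mine, and it assumes you want the same `-nvme-ena` suffix used above and that `uname -r` reports the kernel you intend to boot.

```shell
#!/usr/bin/env bash
# Print the path of the custom init image for a given kernel version.
initramfs_path() {
  local kver="$1"
  printf '/boot/initramfs-%s-nvme-ena.img\n' "$kver"
}

# Build the image for the given kernel (default: the running one).
# Needs root, since it writes into /boot.
build_initramfs() {
  local kver="${1:-$(uname -r)}"
  mkinitrd -v --with=nvme --with=ena -f "$(initramfs_path "$kver")" "$kver"
}
```

Running `build_initramfs` with no arguments builds the image for the running kernel; pass a version string to target another installed kernel.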

Using lsinitrd you can check that your new init image has nvme and ena module files inside.

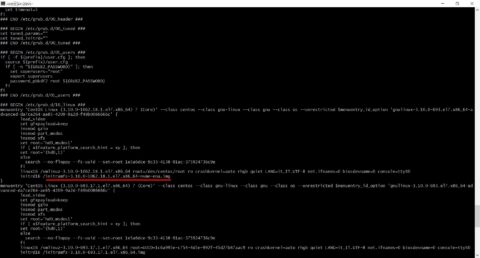
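The lsinitrd check can also be scripted, which is handy if you do this on more than one machine. A minimal sketch, assuming the module files show up in the listing as `nvme.ko` and `ena.ko`, optionally with an `.xz` suffix; `has_modules` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Read an lsinitrd listing on stdin and check that both module files
# are present. Prints "ok" or the first missing module.
has_modules() {
  local listing m
  listing="$(cat)"
  for m in nvme ena; do
    if ! grep -Eq "/${m}\.ko(\.xz)?\$" <<<"$listing"; then
      echo "missing: $m"
      return 1
    fi
  done
  echo "ok"
}
```

Usage would be something like `lsinitrd /boot/initramfs-3.10.0-1062.18.1.el7.x86_64-nvme-ena.img | has_modules`.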

Now you have to edit your grub config file (/boot/grub2/grub.cfg) and change your first menu entry, switching it from the old init image to the new one.

Save /boot/grub2/grub.cfg, CHECK AGAIN THAT YOU HAVE A GOOD SNAPSHOT OR AN AMI, and reboot; nothing should have changed.

Now you can make a new snapshot or AMI and launch a new instance from it. Choose a C5 instance type, and this time it should boot properly.
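I did the edit by hand, but it can be done with sed too. The sketch below assumes the initrd line of your entry references a file named `initramfs-<kernel version>.img` and that the new image uses the `-nvme-ena` suffix from the mkinitrd example; `switch_initrd` is a made-up name. It keeps a `.bak` copy of the original file.

```shell
#!/usr/bin/env bash
# switch_initrd CFG KVER
# Point the menu entry for kernel KVER at the -nvme-ena init image.
# Entries for other kernels (or the rescue image) are left untouched.
switch_initrd() {
  local cfg="$1" kver="$2"
  # Escape the dots so sed treats the version as literal text.
  local esc="${kver//./\\.}"
  sed -i.bak "s/initramfs-${esc}\.img/initramfs-${kver}-nvme-ena.img/" "$cfg"
}
```

For example: `switch_initrd /boot/grub2/grub.cfg "$(uname -r)"`, then diff the file against `grub.cfg.bak` before rebooting.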

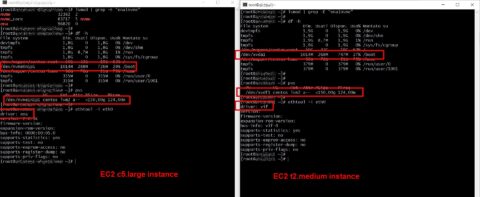

As you can see, the new C5 instance has different storage device names, a new NIC driver (ena), and both the ena and nvme modules loaded.

Life should be easier without the cloud… again.
